Multi-GPU Computer

| 08/06/2025 | Written By: Kim Ippolito | Formatted/Edited By: Lauren Ippolito |

In building the AI lab, I wanted a PC with as much GPU power as possible. I opted for one PC with multiple GPUs rather than many servers with one GPU each. That way, when I use the Distributed Data Parallel (DDP) technique for training and inference of deep learning models, the CPU can feed data to the GPUs over the local PCIe bus rather than across the network. Keeping them all together makes development, deployment, and debugging much simpler.
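As a rough illustration of how DDP divides the work, the sketch below shows which samples each GPU process (each "rank") would receive. It assumes the non-shuffled, `drop_last=False` behavior of PyTorch's `torch.utils.data.distributed.DistributedSampler`: indices are padded by wrapping around so they divide evenly, then each rank takes every `world_size`-th index. The function `shard_indices` is hypothetical; in a real training script you would pass a `DistributedSampler` to the `DataLoader` instead.

```python
import math


def shard_indices(dataset_len: int, world_size: int, rank: int) -> list[int]:
    """Return the sample indices one DDP process (one GPU) would see.

    Mirrors the non-shuffled, drop_last=False behavior of PyTorch's
    DistributedSampler: pad the index list by wrapping around so it divides
    evenly across GPUs, then take every world_size-th index starting at rank.
    """
    if not 0 <= rank < world_size:
        raise ValueError("rank must be in [0, world_size)")
    if dataset_len == 0:
        return []
    num_samples = math.ceil(dataset_len / world_size)  # samples per GPU
    total_size = num_samples * world_size
    indices = list(range(dataset_len))
    # Reuse indices from the start so every GPU gets the same number of samples.
    padding = total_size - len(indices)
    indices += (indices * math.ceil(padding / len(indices)))[:padding]
    return indices[rank:total_size:world_size]
```

For example, with 12 samples and 5 GPUs, `shard_indices(12, 5, 2)` returns `[2, 7, 0]`: each GPU gets 3 samples, and samples 0-2 are reused as padding.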

So my goal was to build a PC that could handle 5-6 GPUs, and to name it “Einstein”.

Getting the Motherboard

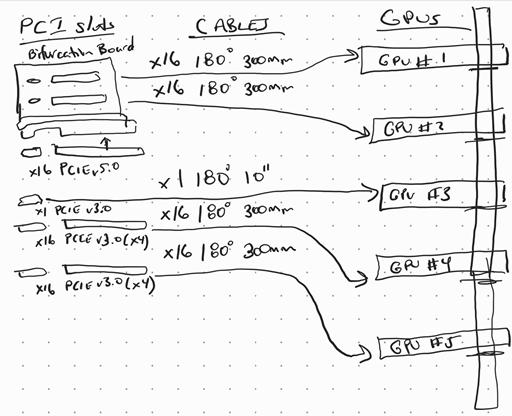

The first task was to find a motherboard with enough PCIe slots for 5-6 GPUs. I found many that support 5-6 slots, but most did not use the latest PCIe version. I also learned that the number of available PCIe lanes is largely dictated by the CPU. I ended up purchasing the ASUS ROG STRIX Z790-A motherboard, which has the following PCIe slots:

- PCIe 5.0 x16 slot @ x16 (which can be bifurcated into two x8 slots)

- PCIe 4.0 x16 slot @ x4

- PCIe 4.0 x16 slot @ x4

- PCIe 3.0 x4 slot @ x1

With the first slot bifurcated, I can connect 5 GPUs: 2 on PCIe 5.0 @ x8, 2 on PCIe 4.0 @ x4, and 1 on PCIe 3.0 @ x1.
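To put the slot list in perspective, the sketch below estimates the theoretical one-direction bandwidth of each GPU link. It assumes the standard PCIe signaling rates (8, 16 and 32 GT/s per lane for PCIe 3.0, 4.0 and 5.0, all using 128b/130b encoding) and ignores protocol overhead, so real throughput will be somewhat lower. The slot labels are illustrative.

```python
# Raw signaling rate per lane in GT/s; PCIe 3.0 through 5.0 all use 128b/130b encoding.
GT_PER_S = {3: 8.0, 4: 16.0, 5: 32.0}


def link_bandwidth_gbps(gen: int, lanes: int) -> float:
    """Approximate one-direction bandwidth of a PCIe link in GB/s.

    Ignores packet/protocol overhead, so real-world throughput is lower.
    """
    per_lane = GT_PER_S[gen] * (128 / 130) / 8  # GT/s -> GB/s after encoding
    return per_lane * lanes


# The five GPU links: (label, PCIe generation, electrical lanes).
SLOTS = [
    ("GPU 1 (PCIe 5.0, bifurcated)", 5, 8),
    ("GPU 2 (PCIe 5.0, bifurcated)", 5, 8),
    ("GPU 3 (PCIe 4.0)", 4, 4),
    ("GPU 4 (PCIe 4.0)", 4, 4),
    ("GPU 5 (PCIe 3.0)", 3, 1),
]

if __name__ == "__main__":
    for label, gen, lanes in SLOTS:
        print(f"{label:30s} x{lanes:<2d} ~{link_bandwidth_gbps(gen, lanes):5.1f} GB/s")
```

This works out to roughly 31.5 GB/s for each bifurcated PCIe 5.0 x8 link, about 7.9 GB/s for each PCIe 4.0 x4 link, and just under 1 GB/s for the PCIe 3.0 x1 link, a 32x gap between the fastest and slowest GPU. A link also runs at the lower of the slot's and the card's PCIe generation, so a PCIe 4.0 GPU in the 5.0 slot gets about half the x8 figure.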

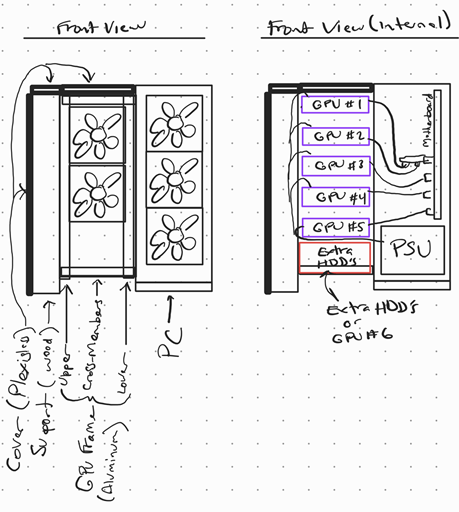

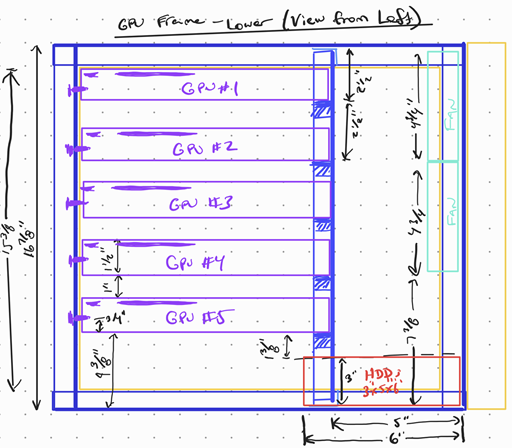

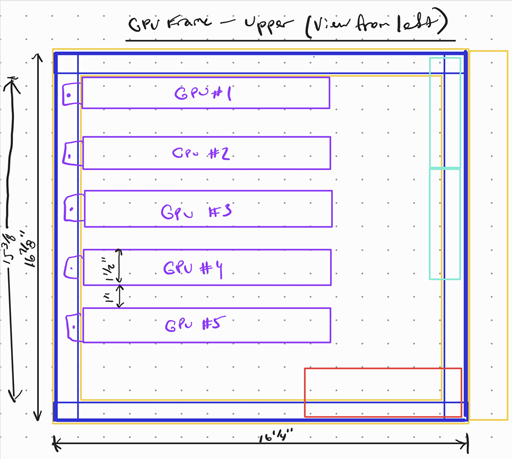

GPU Frame

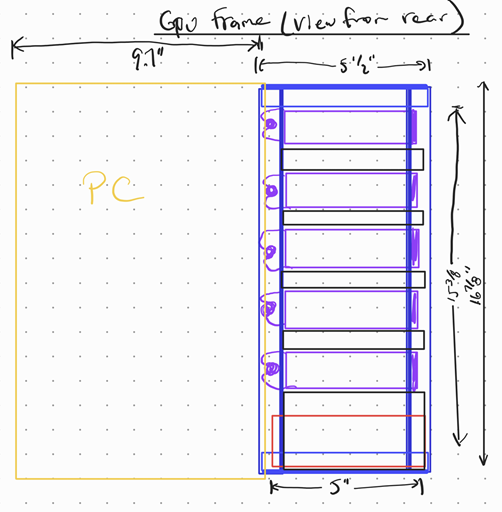

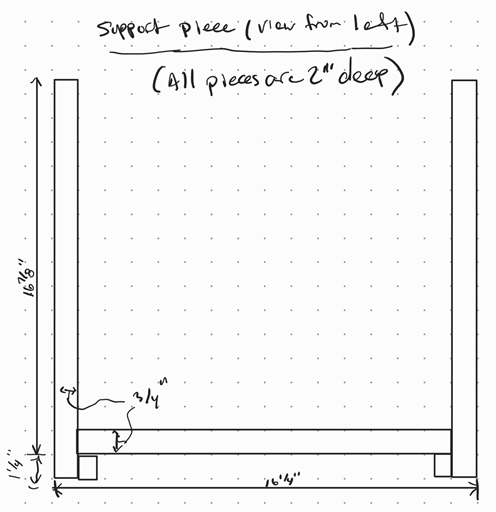

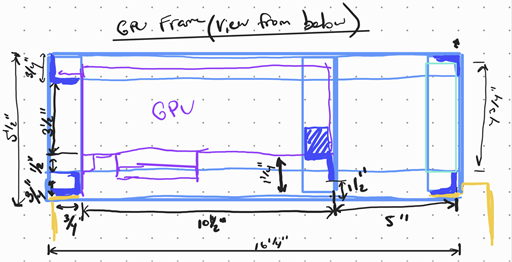

Next, I needed a frame to hold the GPUs, so I built one from aluminum (from Home Depot) joined with rivets. I attached it to the existing computer case, which made the computer twice as wide. The following diagram shows how the GPU frame is attached to the PC.

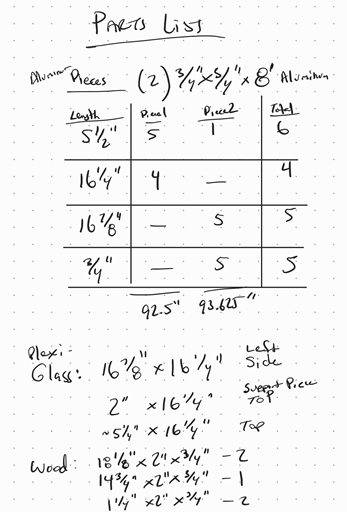

The following diagrams show the design for the GPU frame.

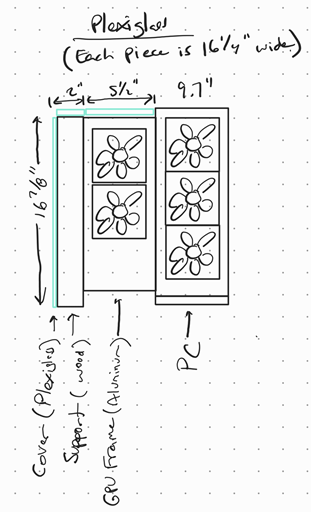

I also covered the outside with plexiglass (shown on the left). The full parts list is shown on the right.

Connecting the GPUs

To connect the GPUs to the motherboard, I needed a bifurcation board (to split the PCIe 5.0 x16 slot into two x8 slots) and PCIe “extender” (riser) cables with 180-degree connectors, each around 12” long. The connections are shown below.

Power

The 1200 W power supply I started with had neither enough GPU power cables nor enough wattage to support 5 GPUs, so I opted to use two power supplies, doubling my power budget. Running two power supplies in one PC requires a small circuit board (available on eBay or Amazon) called a “dual PSU multiple power supply adapter” or “add2psu”, which turns the second power supply on and off together with the first.
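A quick way to sanity-check a build like this is to add up the expected draw on each power supply. The sketch below does that with hypothetical numbers (250 W per GPU, 250 W for the CPU, 100 W for everything else) and assumes the common rule of thumb of keeping sustained load at or below about 80% of each PSU's rating. It also assumes the second PSU is a 1200 W unit like the first and shows just one possible way of splitting the load; none of these figures are measurements from Einstein.

```python
# All wattages below are hypothetical placeholders, not measurements of this build.
GPU_W = 250              # assumed peak draw per GPU
CPU_W = 250              # assumed peak CPU draw
OTHER_W = 100            # assumed motherboard, drives, fans, etc.
PSU_CAPACITY_W = 1200    # first PSU; the second is assumed to match
MAX_LOAD_FRACTION = 0.8  # rule of thumb: keep sustained load <= ~80% of rating


def headroom(capacity_w: float, loads_w: list[float]) -> float:
    """Watts left on one PSU after the safety margin (negative = overloaded)."""
    return capacity_w * MAX_LOAD_FRACTION - sum(loads_w)


# Option 1: a single PSU powers everything.
single = headroom(PSU_CAPACITY_W, [CPU_W, OTHER_W] + [GPU_W] * 5)

# Option 2: two PSUs linked by an add2psu board. The primary powers the
# motherboard, CPU and two GPUs; the secondary powers the other three GPUs.
primary = headroom(PSU_CAPACITY_W, [CPU_W, OTHER_W] + [GPU_W] * 2)
secondary = headroom(PSU_CAPACITY_W, [GPU_W] * 3)

if __name__ == "__main__":
    print(f"single PSU headroom:    {single:+.0f} W")
    print(f"primary PSU headroom:   {primary:+.0f} W")
    print(f"secondary PSU headroom: {secondary:+.0f} W")
```

With these assumed figures, a single 1200 W unit comes up 640 W short of the margin, while splitting the load across two units leaves 110 W and 210 W of headroom. It is commonly recommended to power each GPU's auxiliary power connectors from a single PSU rather than splitting one card across both supplies.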

Temperature

To control GPU temperatures, I have 5 fans in the front, 1 fan in the back, plus the CPU fans. In the ASUS software, I tied the 5 front fans and the back fan to both the CPU and GPU temperatures.
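A fan curve maps a temperature reading to a fan speed. The sketch below illustrates the general idea, assuming the case fans follow whichever of the CPU or GPU is hotter and interpolating linearly between breakpoints. The breakpoints and the `fan_duty` function are hypothetical; this is not how the ASUS software is implemented, nor the settings used in this build.

```python
# Hypothetical fan curve breakpoints: (temperature in °C, fan duty in %).
CURVE = [(30, 20), (50, 35), (65, 60), (75, 85), (85, 100)]


def fan_duty(cpu_temp_c: float, gpu_temp_c: float) -> float:
    """Return a fan duty cycle (%) driven by the hotter of the two sensors."""
    t = max(cpu_temp_c, gpu_temp_c)
    if t <= CURVE[0][0]:
        return float(CURVE[0][1])  # idle floor below the first breakpoint
    for (t0, d0), (t1, d1) in zip(CURVE, CURVE[1:]):
        if t <= t1:
            # Linear interpolation between neighboring breakpoints.
            return d0 + (d1 - d0) * (t - t0) / (t1 - t0)
    return float(CURVE[-1][1])  # full speed above the last breakpoint
```

For example, `fan_duty(45, 70)` returns `72.5`: the GPU is the hotter sensor, and 70 °C sits halfway between the 65 °C and 75 °C breakpoints.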

In addition, four of my GPUs have blower-style fans, which exhaust hot air out the back of the card rather than recirculating it inside the PC.

This generally worked, but one of the GPUs was running at around 85 degrees Celsius. After I replaced its thermal paste and thermal pads, its temperature dropped to 75 degrees Celsius.
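To spot a card running hot, it helps to log per-GPU temperatures. The sketch below assumes NVIDIA GPUs with `nvidia-smi` on the `PATH`, and uses its `--query-gpu` and `--format=csv,noheader,nounits` options to read each card's index, name and temperature. The 80 °C threshold and the function names are hypothetical choices.

```python
import subprocess

# nvidia-smi query: one CSV line per GPU with index, name and core temperature (°C).
QUERY = [
    "nvidia-smi",
    "--query-gpu=index,name,temperature.gpu",
    "--format=csv,noheader,nounits",
]


def parse_temps(csv_text: str) -> dict[int, tuple[str, int]]:
    """Parse nvidia-smi CSV output into {gpu_index: (name, temperature_c)}."""
    temps: dict[int, tuple[str, int]] = {}
    for line in csv_text.strip().splitlines():
        parts = [p.strip() for p in line.split(",")]
        index, temp = parts[0], parts[-1]
        name = ", ".join(parts[1:-1])  # re-join in case a name contains commas
        if not temp.isdigit():  # e.g. "[N/A]" when a sensor is unavailable
            continue
        temps[int(index)] = (name, int(temp))
    return temps


def hot_gpus(temps: dict[int, tuple[str, int]], threshold_c: int = 80) -> list[int]:
    """Return the indices of GPUs at or above the threshold temperature."""
    return [i for i, (_, t) in temps.items() if t >= threshold_c]


def read_gpu_temps() -> dict[int, tuple[str, int]]:
    """Run nvidia-smi (must be on PATH) and parse its output."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_temps(result.stdout)
```

Calling `hot_gpus(read_gpu_temps())` periodically (for example, from a scheduled task) returns the indices of any GPUs at or above the threshold.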

Software

Since this PC is also equipped with 4 high-capacity HDDs, I use it for several purposes beyond training deep learning models. So, unlike my Linux servers, I decided to run Windows 11 (the non-server edition) on Einstein.
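Running Windows 11 has one DDP-specific consequence worth noting: PyTorch's `torch.distributed` package does not support the NCCL backend on Windows, so multi-GPU training there typically uses the Gloo backend instead. The small helper below, whose name is hypothetical, picks a backend name based on the operating system.

```python
import sys


def ddp_backend(platform: str = sys.platform, has_cuda: bool = True) -> str:
    """Return a torch.distributed backend name suited to the OS.

    NCCL is the usual choice for multi-GPU training on Linux, but PyTorch does
    not support NCCL on Windows, so fall back to Gloo there (and for CPU-only runs).
    """
    if platform.startswith("win") or not has_cuda:
        return "gloo"
    return "nccl"
```

The returned string can be passed as the `backend` argument of `torch.distributed.init_process_group`, for example as `ddp_backend(has_cuda=torch.cuda.is_available())`.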

I usually operate this PC via remote desktop from my laptop rather than sitting in front of it.

What would I change?

My biggest concern was the data transfer speed to my 5th GPU, which uses the final PCIe 3.0 slot running at x1. As it turns out, it doesn’t seem to be a bottleneck for my workloads, so it hasn’t been much of a problem. But if I were to do it again, I would probably look into a CPU that supports more PCIe lanes (most likely a server- or workstation-class CPU).
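To see why the x1 link was worth worrying about, the sketch below estimates how long copying one training batch from CPU memory to a GPU would take over the slowest and fastest links. The batch shape (64 RGB images at 224x224 in float32) is a hypothetical example, and the estimate uses theoretical PCIe bandwidth, ignoring protocol overhead.

```python
# Theoretical per-lane signaling rate (GT/s); PCIe 3.0-5.0 use 128b/130b encoding.
GT_PER_S = {3: 8.0, 4: 16.0, 5: 32.0}


def transfer_ms(num_bytes: int, gen: int, lanes: int) -> float:
    """Ideal one-way copy time in milliseconds, ignoring protocol overhead."""
    bytes_per_s = GT_PER_S[gen] * 1e9 * (128 / 130) / 8 * lanes
    return num_bytes / bytes_per_s * 1e3


# Hypothetical batch: 64 RGB images at 224x224, float32 (4 bytes per value).
BATCH_BYTES = 64 * 3 * 224 * 224 * 4

x1_ms = transfer_ms(BATCH_BYTES, gen=3, lanes=1)  # 5th GPU: PCIe 3.0 x1
x8_ms = transfer_ms(BATCH_BYTES, gen=5, lanes=8)  # bifurcated PCIe 5.0 x8
print(f"PCIe 3.0 x1: {x1_ms:.1f} ms, PCIe 5.0 x8: {x8_ms:.2f} ms")
```

Under these assumptions the copy takes about 39 ms over PCIe 3.0 x1 versus about 1.2 ms over PCIe 5.0 x8. Whether that matters depends on how long the GPU spends computing on each batch; pinned memory with non-blocking copies (`pin_memory=True` in PyTorch's `DataLoader` and `.to(device, non_blocking=True)`) lets copies overlap with computation. In DDP the gradient all-reduce is synchronous, so for models with many parameters the slowest link can also hold back the other GPUs.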

I would also reconsider the decision to buy blower-style GPUs rather than open-air GPUs. The open-air GPUs run at a much lower temperature than the blower-style ones, which may improve their longevity.